Renault-Nissan Mediated Spaces

During my time as an Interaction Design Engineer at the Renault-Nissan-Mitsubishi Alliance Innovation Lab in Silicon Valley, I explored novel use cases and user experiences for autonomous vehicles. The projects were diverse and drew on a range of skills, from mechanical design and CAD to the simple programming needed to build proof-of-concept prototypes with Processing, TouchDesigner, and Unity. For one project, for example, I mapped and implemented the system architecture for a complex suite of technologies that communicated over Open Sound Control (OSC), using the osc4py3 Python library, to drive an immersive, mediated multi-projector environment.

I improved the user experience by simplifying interactions wherever possible. I also optimized the system architecture (MadMapper, TouchDesigner, Unity, Raspberry Pi) to allow near-automatic operation, simplified the wiring and integrated the electronic components for easier system startup, and developed a UI that made setup and operation more accessible to non-technical team members.

· Design and develop mechanical and software solutions for hardware integration to enable user testing of proof-of-concept prototypes of user interactions and experiences.

· Leverage video coding, observational research, and user interviews to understand user contexts and discover pain points, gain insight into public perception of driverless vehicle technologies, and improve passenger experiences within these vehicles.

· Plan, execute, and iterate on immersive environments and interactions (Python, Google AIY, TouchDesigner, Processing) for proof-of-concept prototypes and live demos to CXOs from Nissan-Japan and Renault-France.

· Use Python to clean raw car telemetry data, reducing file size by 70% (more than 700,000 entries). Use OSC and Syphon to stream telemetry data and video content in real time across a multi-device setup.
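To illustrate the OSC streaming mentioned above, here is a minimal sketch of how a telemetry sample can be packed into an OSC message and sent over UDP. It is not the project's code: the prototype used the osc4py3 library, whereas this sketch encodes the OSC 1.0 wire format by hand with the Python standard library so that it runs without extra dependencies. The address `/telemetry/sample`, the two fields (speed and steering angle), and the host and port are all hypothetical.

```python
import socket
import struct
from typing import Sequence


def _osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * ((-len(raw)) % 4)


def encode_osc_floats(address: str, values: Sequence[float]) -> bytes:
    """Build an OSC 1.0 message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)  # e.g. ",ff" for two floats
    payload = b"".join(struct.pack(">f", v) for v in values)  # big-endian float32
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_sample(sock: socket.socket, host: str, port: int,
                speed_kmh: float, steering_deg: float) -> bytes:
    """Send one telemetry sample as a single UDP datagram and return the packet."""
    # Hypothetical address pattern; the real project's addresses are not documented.
    packet = encode_osc_floats("/telemetry/sample", [speed_kmh, steering_deg])
    sock.sendto(packet, (host, port))
    return packet


if __name__ == "__main__":
    # Assumed receiver (e.g. a TouchDesigner OSC In node) on localhost:9000.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as udp:
        send_sample(udp, "127.0.0.1", 9000, speed_kmh=42.0, steering_deg=-3.5)
```

Because each sample travels as a single small UDP datagram, a receiver such as TouchDesigner or Unity can consume values as they arrive without any connection setup. That suits real-time playback, where an occasional dropped sample matters less than latency.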

Initial testing setup with five projectors:

Testing of projection mapping alignment across five projectors:

Simulating driving through a forest – making the vehicle invisible:

One concept I explored with the immersive in-vehicle experience was whether passengers could feel more connected to their environment, in whatever form that connection might take. This phase of the prototype shows my attempt to make the physical car invisible, with the aim of creating a more intimate experience between passengers and their surroundings. The image above shows an early exploration of this concept.

In-vehicle testing of various media and utilitarian concepts:

User Testing with UC Berkeley's BRAVO research lab prototype:

The images here show a demo of the prototype integrated into the Airstream trailer, which the students presented to my colleagues from the AILSV research lab. The students used the Airstream as a blank canvas to prototype future mobility experiences, employing a simpler projection mapping scheme with a single projector. The concept shown was intended to simulate a design intervention enacted by an autonomous vehicle: while in traffic on the Bay Bridge, the vehicle would prompt the driver to hand over vehicle operation. The interior could then transition so that the driver, now in a passive role, could sit back and, instead of looking at Bay Bridge gridlock, be transported to a calming view from a yacht, relaxing at sea.

I performed multiple studies to assess the feasibility of more than seven projector models based on their size, heat generation, throw characteristics, operating noise, image quality, imaging engine, and display technology.

Since this prototype would be demoed on the street during daytime hours, the selected projector needed sufficient lumen output to provide reasonably cinematic projection quality in daylight. This was made more difficult because the projectors also needed to be unobtrusive: small enough to be invisible to passengers in the vehicle.

As the project progressed, the requirements shifted. The system grew from three projectors to six, and from multiple Raspberry Pis to a single desktop computer integrated into the rear of the test vehicle. Projectors and wiring were integrated into the vehicle itself; panels were removed and modified to accommodate the routing of these components.

The initial testing setup was modular and integrated into the vehicle roof. It allowed flexible projector positioning, with translation along the x and y axes and some rotation. This design enabled quicker testing because different projectors could be swapped in and out of the system and quickly repositioned. The projectors’ positions were fixed once I selected the final projector models.

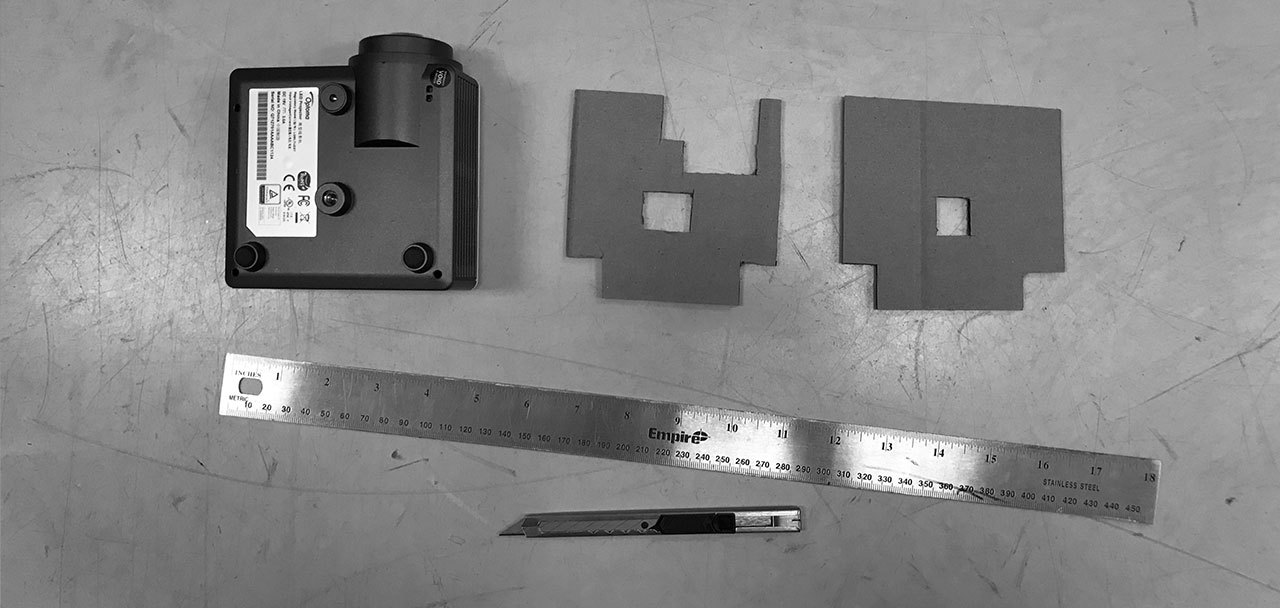

I saved time in the initial prototyping phase by creating low-fidelity interfaces, such as the one below. The projector shown had odd surface features on its base, making it difficult to mount securely, so I layered cardboard to create a flat interfacing surface.

The project was intended for an on-road demo; thus, much of the testing was also done outdoors. I tested projector efficacy under typical daytime conditions. To determine the required projector specifications, I used formulas provided by the Society of Motion Picture and Television Engineers (SMPTE) for achieving near-cinematic image quality. The results were somewhat disappointing because they confirmed that projectors meeting these specifications did not yet exist in the compact form factor we needed for in-vehicle integration.
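As a rough illustration of this kind of sizing calculation, the sketch below estimates the lumen output needed to reach a target screen luminance. It uses the standard photometric relation (luminance in foot-lamberts = lumens × screen gain / area in square feet) and the commonly cited SMPTE cinema target of about 16 foot-lamberts. The exact formulas and inputs used in the project are not documented here, so the surface dimensions and gain are hypothetical, and the calculation ignores ambient light, which in daytime use pushes the real requirement well beyond this figure.

```python
SQFT_PER_SQM = 10.7639  # square feet in one square metre


def required_lumens(width_m: float, height_m: float,
                    target_fl: float = 16.0, screen_gain: float = 1.0) -> float:
    """Estimate the projector lumens needed to reach a target screen luminance.

    Rearranges fL = lumens * gain / area_sqft for lumens.
    The default of 16 fL is the commonly cited SMPTE cinema target (assumption).
    """
    if screen_gain <= 0:
        raise ValueError("screen_gain must be positive")
    area_sqft = width_m * height_m * SQFT_PER_SQM
    return target_fl * area_sqft / screen_gain


if __name__ == "__main__":
    # Hypothetical in-vehicle projection surface: 1.2 m x 0.6 m, matte (gain 1.0).
    lumens = required_lumens(1.2, 0.6)
    print(f"Approximate lumens needed in a dark room: {lumens:.0f}")
```

In a dark cinema this relation gives modest numbers for a small surface. The difficulty in a vehicle is that the image must also remain visible against daylight, which is why compact projectors fell short.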

To enable on-road testing and live demos, I designed the computer, projector, and data collection systems to be powered by a single external battery bank that could be easily integrated into the system as needed.